By David Deady

The rapid integration of AI into hiring has sparked a critical debate on its ethical implications. While AI promises efficiency and objectivity, it also raises concerns about bias, privacy, and transparency. As companies increasingly rely on algorithms to screen resumes, conduct interviews, and compose outreach, questions about fairness and accountability become paramount. Can AI truly eliminate human prejudice, or does it merely mask underlying biases in data?

The potential of AI in hiring and recruiting is huge. But so are the dangers. Left unchecked and unscrutinised, this tech has the capacity to cause significant harm. In this article we traverse the ethical landscape of AI-driven recruitment, exploring the delicate balance between innovation and integrity, and the steps necessary to ensure that technology enhances, rather than hinders, fair employment practices.

The Promise of AI in Recruitment

As HBR states:

“These tools put unprecedented power in the hands of organizations to pursue data-based human capital decisions.”

AI technologies offer several advantages in the hiring process. The primary benefits include the ability to process large volumes of applications quickly and efficiently, provide detailed feedback to candidates at scale, and automate administrative tasks. As many of us can attest, traditional recruitment methods can be time-consuming and prone to human error, whereas AI systems can analyze resumes, manage processes, and identify the most qualified candidates in a fraction of the time.

Additionally, AI has the potential to reduce bias in hiring. Human recruiters, despite their best efforts, can be influenced by unconscious biases. AI algorithms, if designed correctly, can help mitigate these biases by focusing solely on what a candidate can bring to a role rather than on factors such as gender, race, or age. For instance, companies like HireVue and Pymetrics use AI to evaluate candidates’ skills and fit for a role based on objective data, promoting a more meritocratic approach to hiring.

It can be so easy to get wrapped up in this optimism – but we mustn’t lose sight of the shadows that accompany these advancements.

Learn more: How is AI Impacting Recruiting?

Ethical Concerns and Challenges

Despite its many advantages, the use of AI in hiring presents several significant ethical concerns. At the forefront is the risk of algorithmic bias. AI systems learn from historical data, and if this data contains biases, the AI can replicate and even magnify them. Imagine an AI system trained on a dataset from a company that has historically favored male candidates. This system might perpetuate that preference, continuing to recommend male candidates over equally qualified female candidates, thereby reinforcing gender inequality in the workplace. A stark example is Amazon’s AI recruiting tool, which was reported in 2018 to have been scrapped after it was found to penalize resumes that included the word “women’s,” reflecting gender biases the system had learned from the data it was trained on.

Bias extends beyond gender. A study by MIT and Stanford researchers found that facial recognition systems exhibited higher error rates for darker-skinned individuals and women, underscoring a broader risk of bias in AI technologies. In hiring, such biases could lead to discriminatory practices, undermining fairness and inclusivity. The implications are profound, shaping workplace diversity, company culture, and societal perceptions of equity. Without careful oversight, AI can become a tool of discrimination rather than progress, perpetuating racial, age, and socioeconomic biases by favoring candidates from privileged backgrounds.

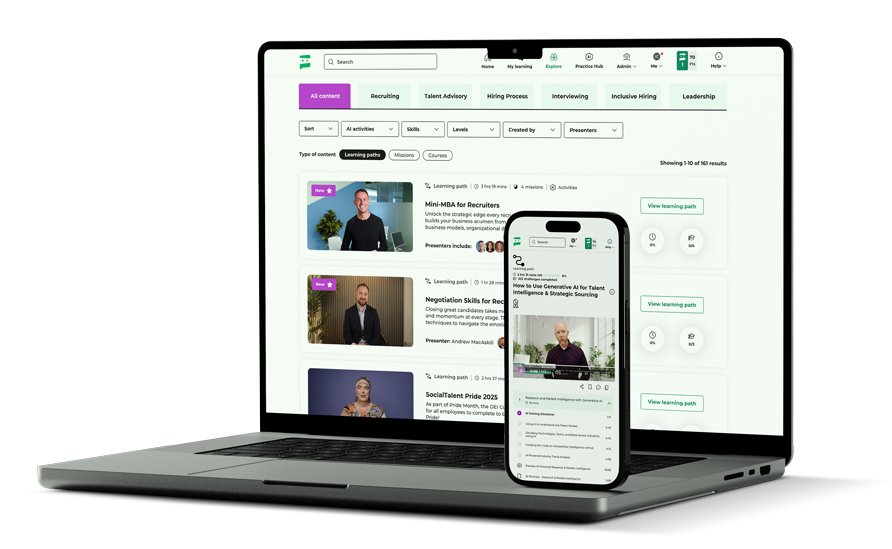

In her training on the SocialTalent platform, Maisha L. Cannon, a recruiting AI expert, says that:

“A good artificial intelligence system is not just capable, but also ethical. It values each individual, ensures accuracy, and maintains transparency.”

So how do you go about ensuring that the AI you are using in your recruitment processes subscribes to this ethos?

The TIP Framework

TIP stands for Transparency, Inclusivity, and Protection. By following these guidelines, created by Maisha Cannon, you can mitigate the biases and dangers associated with using AI in hiring, while ensuring a sounder and fairer outcome. We’re on the cusp of a new frontier when it comes to integrating technology like this into our recruiting processes. We have to set a strong, ethical foundation and build on that.

1. Transparency

As the name suggests, transparency is the cornerstone of ethical AI practice. Clarity in the decision-making process is vital because it helps stakeholders understand the rationale behind AI recommendations. As HR Magazine states:

“Previously if a recruiter was biased, they’d impact a handful of people. If you have a bug in your AI model, it could be affecting hundreds of thousands of potential candidates.”

Transparency around the use of AI is increasingly mandated by legislation, and with good reason: candidates and applicants need to be informed where AI is being used in the process so they can fully understand how decisions are being made.

We spoke to Microsoft’s Thom Staight about this during a recent SocialTalent Live event, and he said there is huge responsibility on recruiters when it comes to the ethical use of AI.

2. Inclusivity

Inclusivity is something to be especially mindful of as we integrate artificial intelligence more deeply into the talent sphere. Every individual, regardless of their background, should have an equal shot in the hiring process. In her training on the SocialTalent platform, Maisha Cannon tells a story from her own experience: during a job search she switched her name from Maisha Cannon to ML Cannon and noticed a significant uptick in responses to her resume when her name and likely ethnicity were obscured.

It’s an important lesson to remember: AI trained on biased data will replicate such biases. If you are using AI you must carry out some form of due diligence to ensure that the outputs don’t reflect ingrained algorithmic discrimination. It’s why the human touchpoint is so vital. AI cannot be left unchecked to make critical decisions.
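One simple form that due diligence can take is comparing how often an AI screening step advances candidates from different groups. The sketch below is illustrative only: the function names, the group labels, and the sample data are hypothetical, and the 0.8 threshold follows the commonly cited "four-fifths" rule of thumb for adverse impact. A flag is a prompt for human review, not proof of discrimination, and it does not replace legal or statistical advice.

```python
from collections import Counter

def selection_rates(outcomes):
    """Return the share of candidates advanced per group.

    outcomes: iterable of (group, advanced) pairs, where advanced is a bool.
    """
    totals = Counter()
    selected = Counter()
    for group, advanced in outcomes:
        totals[group] += 1
        if advanced:
            selected[group] += 1
    return {group: selected[group] / totals[group] for group in totals}

def adverse_impact_flags(outcomes, threshold=0.8):
    """Return groups whose selection rate falls below `threshold` times
    the highest group's rate, mapped to their impact ratio."""
    rates = selection_rates(outcomes)
    best = max(rates.values(), default=0)
    if best == 0:
        return {}  # nobody advanced, so there is nothing to compare
    return {
        group: rate / best
        for group, rate in rates.items()
        if rate / best < threshold
    }

# Hypothetical screening results: (group label, advanced to interview?)
sample = (
    [("group_a", True)] * 5 + [("group_a", False)] * 5
    + [("group_b", True)] * 3 + [("group_b", False)] * 7
)

if __name__ == "__main__":
    print(selection_rates(sample))
    print(adverse_impact_flags(sample))
```

Running a check like this on a regular basis, and having a person investigate anything it flags, is one way to keep that human touchpoint in the loop.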

Learn more: Balancing Act – Navigating the AI Revolution in TA

3. Protection

Protection emphasizes data security, a non-negotiable aspect of our digital age. Be mindful of what information you put into AI systems, and always consider data protection first. Candidates’ personal information is sensitive and must be handled with care.

The best way to ensure this is by speaking with your IT and HR teams. It can be a minefield, so getting expert guidance is essential. Every organization and every country has different laws and requirements when it comes to AI, and it’s important to understand how these affect your use of the tech when hiring. It can be very easy to be swayed by the efficiency of AI, but data protection must take precedence over this desire.
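As a small illustration of being mindful about what goes into an AI system, the sketch below strips obvious contact details from a piece of text before it is pasted into or sent to an AI tool. It is a minimal example under stated assumptions: the `redact` function and the two patterns are hypothetical, they only catch simple email addresses and phone numbers, and they will miss names, postal addresses, and other personal data. It is no substitute for the guidance of your IT, HR, and legal teams.

```python
import re

# Illustrative patterns only; real personal data takes many more forms.
EMAIL_PATTERN = re.compile(r"[A-Za-z0-9._%+-]+@[A-Za-z0-9.-]+\.[A-Za-z]{2,}")
PHONE_PATTERN = re.compile(r"\+?\d[\d\s().-]{7,}\d")

def redact(text):
    """Replace simple email addresses and phone numbers with placeholders."""
    text = EMAIL_PATTERN.sub("[EMAIL]", text)
    text = PHONE_PATTERN.sub("[PHONE]", text)
    return text

if __name__ == "__main__":
    note = "Contact jane.doe@example.com or +1 (555) 010-9999 today"
    print(redact(note))
```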

Conclusion

According to a poll we conducted this year, 84% of organizations are using AI to some degree in their recruitment processes. The tide is turning quickly when it comes to this technology, but it’s imperative that we take stock and understand the ethical implications.

While AI has the power to revolutionize and improve the hiring experience, it also has the power to cause immense damage. Vigilance and knowledge are essential in this environment. The rise of AI has also put a stark focus on the human element: we need to ensure that the technology is used to bolster and improve hiring rather than to cultivate bias and drive poor hiring decisions.